Ben Zenker

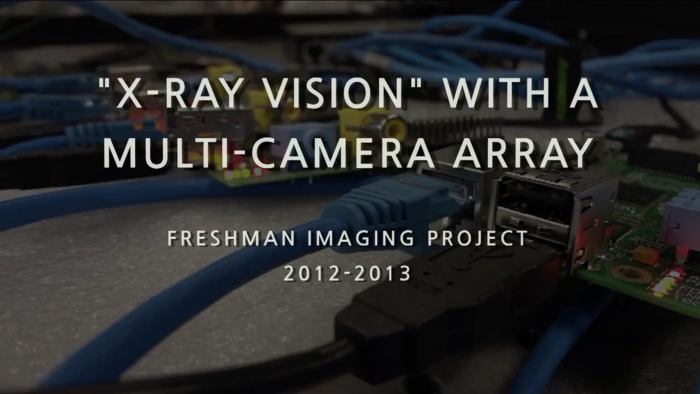

Multi-Camera Array

September 2012 - May 2013

An experimental first-year college class in which we designed, built, and delivered a multi-camera array capable of producing the synthetic aperture effect.

Product

The Freshman Imaging Project is a class that invites first-year Imaging Science and Motion Picture Science students to work together on a year-long, student-driven project built around a real-world imaging problem. Our class was tasked with planning, designing, and building a multi-camera array system that could see through occlusions using the synthetic aperture effect. This course allowed us to grow in ways that a conventional lecture-based class could not.

When this project began, our Product Owner was the Director of Security at a local airport. Our product vision was a system that could help catch thieves at airport baggage carousels, where crowds of people block the view and a traditional camera would struggle to capture the scene. The finished system was displayed and demonstrated at Imagine RIT, RIT’s annual innovation and creativity festival.

To learn more about this class and how it operated, watch this YouTube video.

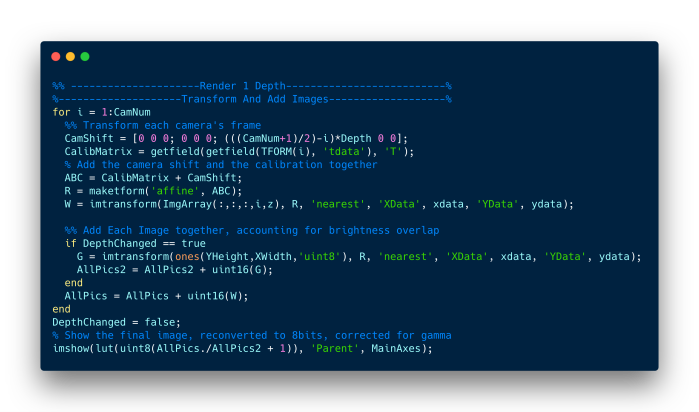

Code

I was a member of the software team, made up of five to eight freshmen who had little programming experience before the class. We designed and built a software package that synchronized and calibrated all six cameras and then processed the video in real time to produce the final output for the user.

I was a key member of this team, applying the MATLAB skills I had taught myself over the year. My contributions included building a basic calibration tool, designing and implementing the user interface, and leading the integration of the image processing modules built by the other members of the team.

This experience greatly influenced my path into software engineering.

Science

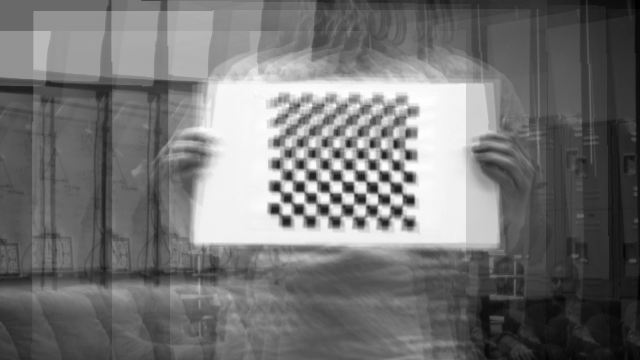

Above: Using images of a checkerboard, we could geometrically align the output of each camera so that the six sensors behaved as if they were part of a single, larger camera. Targets such as this checkerboard were crucial for calibrating the cameras relative to one another. Notice how the images are offset along both the X and Y axes, a result of imprecise physical alignment when we built our camera rig.
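One common way to express this kind of alignment is a planar homography: a 3x3 matrix that maps checkerboard corners seen by one camera onto the same corners seen by a reference camera. The sketch below estimates one with the normalized Direct Linear Transform in Python with NumPy. It is an illustration of the technique under stated assumptions, not the MATLAB calibration tool our team built: it assumes the corners have already been detected and listed in the same order in both views, and the function names and sample numbers are hypothetical.

```python
import numpy as np

def _normalize(pts):
    """Hartley normalization: move the centroid to the origin and scale so the
    mean distance from it is sqrt(2). Returns normalized points and the 3x3 transform."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (homog @ T.T)[:, :2], T

def estimate_homography(src, dst):
    """Estimate H (3x3) so that dst ~ H @ src in homogeneous coordinates,
    using the normalized Direct Linear Transform (DLT)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # Each corner correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The least-squares solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)
    # Undo the normalization applied to both point sets.
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (x, y) points through H, dividing out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    mapped = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Usage: a hypothetical 7x5 grid of checkerboard corners (30 px squares)
# as seen by a second camera through a known, made-up warp.
grid = np.array([(30.0 * i, 30.0 * j) for j in range(5) for i in range(7)])
H_true = np.array([[1.02, 0.01, 12.0],
                   [-0.015, 0.98, -8.0],
                   [1e-5, 2e-5, 1.0]])
observed = apply_homography(H_true, grid)
H_est = estimate_homography(grid, observed)
```

Normalizing the points before solving keeps the linear system well conditioned when pixel coordinates are in the hundreds; with noisy real detections, a library routine that adds outlier rejection would usually be preferable.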

Our starting point for the scientific research, recommended by our professor, was the following video: https://www.youtube.com/watch?v=QNFARy0_c4w

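The basic concept of a synthetic aperture is this: given precise geometric knowledge of how different regions of the imaging sensor see the scene, the user can choose any focal depth, even after the image has been taken. Shifting each view by the amount that matches a chosen depth and averaging the results brings objects at that depth into alignment, while objects at other depths, such as a person standing in front of the target, are blurred away.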
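A minimal sketch of shift-and-add refocusing, the standard way to form a synthetic aperture image from a camera array, is shown below in Python with NumPy. It assumes the images have already been aligned to a common reference plane (for example with checkerboard calibration) and that each camera's position relative to a reference camera is known; the function name, parameters, and toy scene are hypothetical, not our team's code.

```python
import numpy as np

def refocus(images, offsets, disparity):
    """Shift-and-add synthetic aperture refocusing.

    images: list of 2-D arrays, one per camera, already aligned on a common
        reference plane.
    offsets: list of (dx, dy) camera positions relative to the reference camera,
        in units of the camera spacing.
    disparity: pixel shift per unit of camera spacing for the chosen focal plane;
        0 keeps the reference plane in focus.
    """
    acc = np.zeros(images[0].shape, dtype=float)
    for img, (dx, dy) in zip(images, offsets):
        # Undo the parallax that a point on the chosen plane has in this camera.
        shift = (int(round(-dy * disparity)), int(round(-dx * disparity)))
        # np.roll wraps pixels around the border; a real pipeline would crop or pad.
        acc += np.roll(img, shift=shift, axis=(0, 1))
    # Points on the chosen plane line up and stay sharp; points at other
    # depths are spread across several pixels and fade.
    return acc / len(images)

# Usage: three cameras in a row. A target at disparity 3 appears at a different
# column in each view; a foreground point on the reference plane (disparity 0)
# appears at the same pixel in every view.
offsets = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
images = []
for dx, dy in offsets:
    img = np.zeros((20, 20))
    img[10, 10 + int(dx * 3)] = 1.0  # target, shifted by parallax in each view
    img[5, 5] = 1.0                  # foreground point, identical in every view
    images.append(img)

focused_on_target = refocus(images, offsets, disparity=3)
focused_on_foreground = refocus(images, offsets, disparity=0)
```

Sweeping `disparity` over a range of values produces a focal stack, so the focal depth can be picked after capture. With more cameras, anything off the chosen plane is diluted further, which is what lets the array look past a foreground occluder.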

This is a powerful feature. Imagine taking an important photo or video, only to realize later that it was out of focus; with a synthetic aperture, you could simply choose a new focal depth afterward. This was the business model of Lytro (which has since been purchased by Google), a company that referred to its captures as light fields rather than images.

Below is an example of a multi-camera array rig built by a student at MIT, to give a sense of what such hardware can look like. Our own hardware setup appears in the Product section of this project.